Next Up, The Brain

when altering the human genome isn't enough...

The universe works in mysterious ways. I was spending a lot of time on this topic when the following two articles showed up in my news feed.

Source: Unlimited Hangout

Weaponizing Reality: The Dawn of Neurowarfare

Stavroula Pabst explores the race to apply emerging neurotechnologies, such as brain-computer interfaces (BCIs), in times of both war and peace, expanding conflicts into a new domain — the brain — while perhaps forever changing humans’ relationship with machines.

March 21, 2024

Billionaire Elon Musk’s brain-computer interface (BCI) company Neuralink made headlines earlier this year for inserting its first brain implant into a human being. Musk says such implants, which are described as “fully implantable, cosmetically invisible, and designed to let you control a computer or mobile device anywhere you go,” are slated to eventually offer “full-bandwidth data streaming” to the brain.

Brain-computer interfaces (BCIs) are quite the human achievement: as described by the University of Calgary, “A brain computer interface (BCI) is a system that determines functional intent – the desire to change, move, control, or interact with something in your environment – directly from your brain activity. In other words, BCIs allow you to control an application or a device using only your mind.”

Developers and advocates of BCIs and adjacent technologies emphasize that they can help people regain abilities lost due to aging, ailments, accidents or injuries, thus improving quality of life. A brain implant created by Switzerland’s École Polytechnique Fédérale de Lausanne (EPFL), for example, has allowed a paralyzed man to walk again just by thinking. Others go further: Neuralink’s goal is to help people “surpass able-bodied human performance.”

Yet, great ethical concerns arise with such advancements, and the tech is already being used for questionable purposes. To better plan logistics and boost productivity, for example, some Chinese employers have started using “emotional surveillance technology” to monitor workers’ brainwaves, which, “combined with artificial intelligence algorithms, [can] spot incidents of workplace rage, anxiety, or sadness.” The example showcases how personal the technology can become as it is normalized in daily life.

But the ethical ramifications of BCIs and other emerging neurotechnologies don’t stop at the consumer market or the workplace. Governments and militaries are already discussing — and experimenting on — the roles they could play in wartime. Indeed, many are describing the human body and brain as war’s next domain, with a 2020 NATO-backed paper on “cognitive warfare” describing the phenomenon’s objective as “mak[ing] everyone a weapon…The brain will be the battlefield of the 21st century.”

On this new “battlefield,” an era of neuroweapons has begun. Neuroweapons can broadly be defined as technologies and systems that could either enhance or damage a warfighter’s or target’s cognitive and/or physical abilities, or otherwise attack people or critical societal infrastructure.

In this exploration of the race to apply the latest neurotechnologies to war and beyond, I investigated how the neuroweapons of tomorrow, including BCIs that may allow for brain-to-brain or brain-to-machine communication, have the capacity to expand conflicts into a new domain — the brain — while also bringing a new dimension to both hard- and soft-power struggles of the future.

In response to ongoing neurotechnology developments, some allege “neurorights” will protect people’s minds from possible privacy infringements and the myriad ethical issues that new neurotechnologies may pose in the years to come. However, neurorights advocates’ close proximity to the very organizations advancing these neurotechnologies deserves scrutiny and potentially suggests that the “neurorights” movement is poised instead to normalize advanced neurotechnologies’ presence in daily life, perhaps forever changing humans’ relationship with machines.

The Military–Intelligence Complex’s Decades-Long Pursuit of Neurowarfare

Indeed, neuroscience’s very origins lie in war. As Dr. Wallace Mendelson explains in Psychology Today, “Just as American neurology was born in the Civil War, the roots of neuroscience are embedded in World War II.” He explains that while the bond between war and neuroscience has contributed to meaningful advances for the human condition, such as an improved understanding of conditions like post-traumatic stress disorder (PTSD), it has left some worried about neuroscience’s possible military applications.

Controversial yet well-known government attempts to learn more about the brain include Project Bluebird/Artichoke, a 1950s-era project that worked to determine whether people could be involuntarily made to carry out assassinations through hypnosis, as well as the especially infamous MK Ultra, where human mind control experiments were carried out in a variety of institutions in the 1950s and 60s. These projects’ respective conclusions, however, did not signal an end to the US government’s interest in invasive mind studies and technologies. Rather, governments internationally have been interested in the brain sciences ever since, investing heavily in neuroscience and neurotech research.

Initiatives and research explored in this article, like the BRAIN Initiative and the United States Defense Advanced Research Projects Agency’s (DARPA) Next-Generation Nonsurgical Neurotechnology (N³), are often portrayed as altruistic strides towards improving brain health, helping people recover lost physical or mental abilities, and otherwise improving quality of life. Unfortunately, a deeper look reveals a prioritization of military might.

Enhance…

The military is intensely interested in emerging neurotechnologies. The Pentagon’s research arm DARPA directly or indirectly funds about half of invasive neural interface technology companies in the US. In fact, as Niko McCarthy and Milan Cvitkovic highlight in their 2023 writeup of DARPA’s neurotechnology efforts, DARPA has initiated at least 40 neurotechnology-related programs over the past 24 years. From the Interface describes the current state of affairs as DARPA funding “effectively driving the BCI research agenda.”

As we shall see, such projects, many of which focus on somehow enhancing the capabilities of the recipient or wearer of a given piece of technology/augmentation, are making activities like telepathy, mind-control and mind-reading — once the stuff of science fiction — at least plausible, if not tomorrow’s reality.

As McCarthy and Cvitkovic explain on their Substack, for example, the 1999 DARPA-funded Fundamental Research at the [BIO: INFO: MICRO] Interface program led to significant “firsts” in brain-computer interface research, including allowing monkeys to learn to control a Brain Machine Interface (BMI) to reach and grab objects without moving their arms. In another project from the program, monkeys learned how to “position cursors on a computer screen without the animals emitting any behavior,” where signals extrapolated from the monkeys’ movement “goals” were “read” and decoded to move the cursor.

McCarthy and Cvitkovic also highlight that, in more recent years, DARPA-funded scientists have “created the world’s most dexterous bionic arm with bidirectional controls,” have used brain-computer interfaces to accelerate memory formation and recall, and have even “transferred a ‘memory’ (a specific neural-firing pattern) from one rat to another,” where the rat receiving the “memory” almost instantaneously learned to perform a task that typically took weeks of training to learn.

Scientist Miguel Nicolelis discusses an experiment where a monkey uses its thoughts to control a monkey avatar and a robot arm. Filmed at TEDMED 2012.

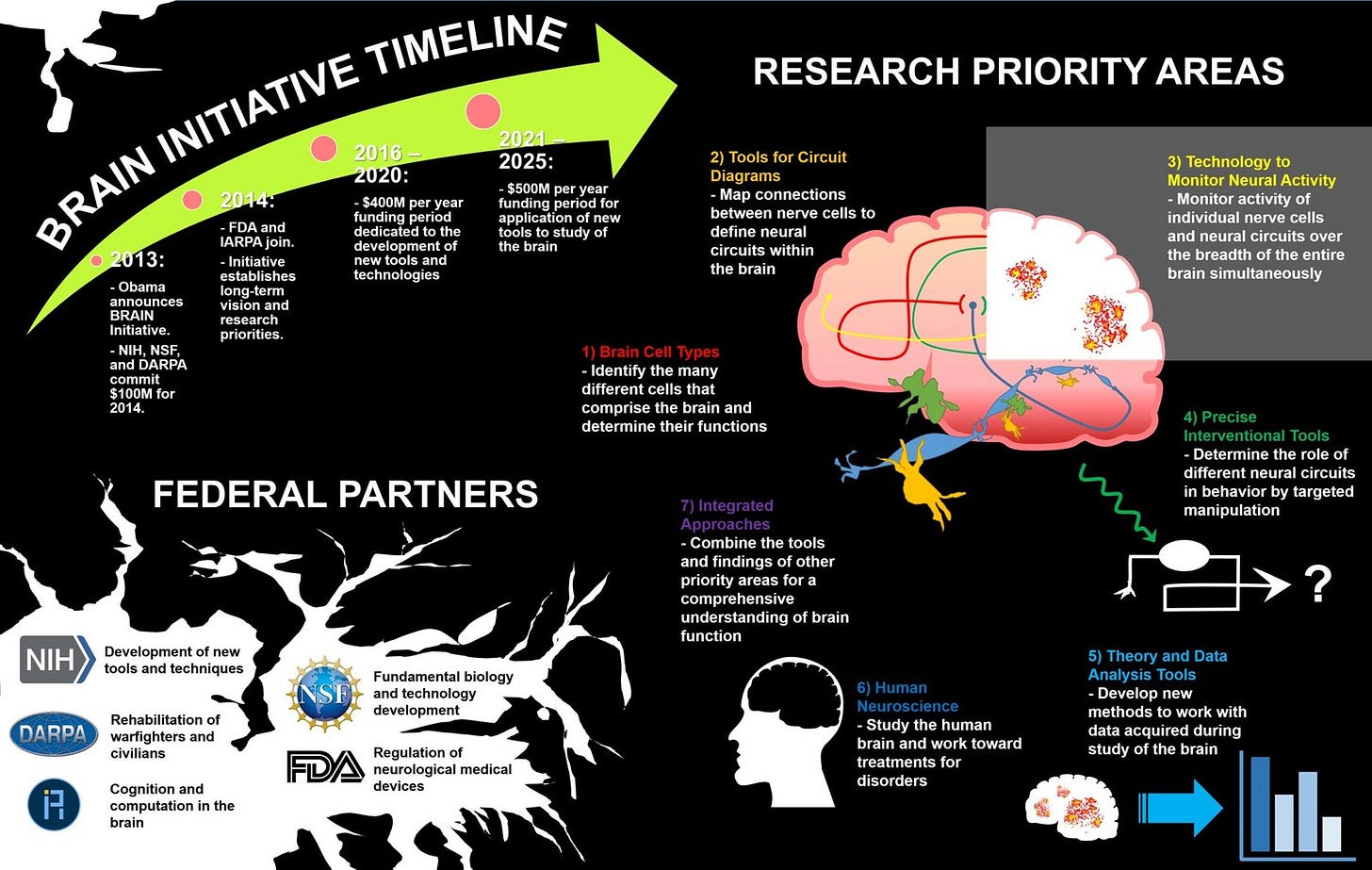

Likewise, the BRAIN (Brain Research through Advancing Innovative Neurotechnologies) Initiative, a US government initiative founded in 2013, is aimed at “revolutionizing our understanding of the human brain” to accelerate the capacities of the neurosciences and neurotechnologies. Inspired by the earlier Human Genome Project, which ran until 2003 and generated the first sequence of the human genome, the BRAIN Initiative markets itself as an initiative working to address common brain disorders, like Alzheimer’s and depression, through intense research of the brain and its operations.

The initiative is led by the National Institutes of Health (NIH), the National Science Foundation (NSF), and DARPA, and its prominent private partners include the Allen Institute for Brain Science (whose founder, Paul Allen, co-founded Microsoft), the Howard Hughes Medical Institute, the Kavli Foundation, and the Salk Institute for Biological Studies. This mix of actors effectively makes the BRAIN Initiative an opaque public-private partnership.

Like many neurotechnology and adjacent initiatives, the BRAIN Initiative depicts itself as a research-forward, public effort that can improve human well-being. Yet, cash flows suggest that its priorities lie more in the military sphere: as per 2013 reporting from Scientific American, DARPA is the biggest funder of the BRAIN Initiative.

What does DARPA’s interest in the BRAIN Initiative amount to, practically speaking? Apparently, the stuff of science fiction.

Indeed, an article titled “DARPA and the Brain Initiative” (an apparently now-deleted page on DARPA’s website) explores DARPA’s eclectic collaboration with the BRAIN Initiative. Co-projects include the ElectRx program, which “aims to help the human body heal itself through neuromodulation of organ functions” through injectable “ultraminiaturized devices”; the HAPTIX program, which is working on neural-interface “microsystems” that communicate externally “to deliver naturalistic sensations” (especially to make prosthetic limbs “feel” and “touch” naturally); and the RE-NET Program, which aims to create technologies able to “extract information from the nervous system” quickly enough to “control complex machines.” Altogether, such projects apply state-of-the-art technologies to the brain to maximize its utilization in and out of conflict, perhaps one day allowing for self-healing, a rehabilitated sense of “touch” for those with lost limbs, and brain-machine communication systems that use thoughts to operate war machinery.

Adjacent neurotech efforts include DARPA’s Next-Generation Nonsurgical Neurotechnology (N³) program, which has a budget of at least $125 million. According to DARPA’s 2018 funding brief for the project, a “neural interface that enables fast, effective, and intuitive hands-free interaction with military systems by able-bodied warfighters is the ultimate program goal.” In plain language, the project is about developing technology that can help warfighters interact with and command military infrastructure (planes, drones, bombs, etc.) with their thoughts and without the need for an invasive, Neuralink-style implant.

Meanwhile, Battelle is using its N³ funding to develop BrainSTORMS (Brain System to Transmit Or Receive Magnetoelectric Signals), an injectable, bidirectional brain-computer interface that, in tandem with a helmet, could one day let someone direct or control vehicles, robots, and other instruments with their thoughts.

In addition to investment in neurotech projects that facilitate brain-based communication and the operation of various technologies, neurotech advancements include improving or “augmenting” the brain’s capacity to operate in myriad ways that will assist fighters on the battlefield. “Enhancements” that claim to improve soldiers’ battlefield performance are not a new phenomenon and have previously included currently illicit drugs like cocaine. Recent developments in neuroscience have jumpstarted new possibilities: according to the Small Wars Journal, technologies and techniques including BCIs, neuropharmacologies, and/or electric currents that stimulate the brain hold the potential for “improv[ing] warfighter performance by enhancing memory, concentration, motivation, and situational awareness while negating the physiological ills of decreased sleep, stress, pain, and traumatic memories.”

Indeed, “augmented cognition” has been an area of focus for DARPA, which worked to develop “technologies capable of extending, by an order of magnitude, the information management capacity of war fighters” in the early 2000s. More recently, University of Florida computer science and information researchers announced in 2022 that they received DARPA’s support to “work to augment human cognition by providing task guidance through augmented reality (AR) headset technology in extreme environments, including high hazard and risky operations.”

And similar initiatives to better understand, and otherwise enhance, the brain and its capacities to take on myriad (especially war-focused) tasks are ongoing. Notably, Spanish researchers developed a “human brain-to-brain interface” in 2014 that would allow humans to communicate with each other through thought alone. The project was funded by the European Commission’s Future and Emerging Technology (FET) program, which is often described as a DARPA equivalent, indicating international interest in developing adjacent technologies.

Other such efforts around the globe include the EU-funded Human Brain Project (2013-2023), the China Brain Project (CBP), Japan’s Brain/MINDS Initiative, and Canada’s Brain Canada. Dr. Rafael Yuste (whom I discuss in more detail below), who helped propose the BRAIN Initiative, is also the coordinator of the International Brain Initiative, which coordinates neurotech efforts and policymaking discussions on the subject at the international level.

Dystopian or not, DARPA and its collaborators and counterparts have been working over the decades to make once-unbelievable activities like brain-to-brain and brain-to-machine communication plausible, if not likely, in the years to come. As we will see, such technologies’ impact on the international stage, the battlefield, and daily life alike will be profound if realized.

…Or Destroy?

Ultimately, the advantages of emerging BCIs and adjacent tools on the battlefield and in conflict are double-edged, as any advancement made to boost a warfighter’s performance can often be applied towards destructive purposes. In neurowarfare, in other words, the brain is capable of being enhanced as well as attacked.

As a 2024 RAND report speculates, if BCI technologies are hacked or compromised, “a malicious adversary could potentially inject fear, confusion, or anger into [a BCI] commander’s brain and cause them to make decisions that result in serious harm.” Academic Nicholas Evans speculates, further, that neuroimplants could “control an individual’s mental functions,” perhaps to manipulate memories or emotions, or even to torture the wearer. Based on these considerations and speculations, if BCIs are used en masse at either the warfighter or civilian level, it seems plausible that some attacks could home in on the BCIs of hostile persons (warfighters or otherwise) to manipulate the contents of their minds, or even brainwash them in some capacity.

Meanwhile, academic Armin Krishnan even posits that forms of mind control found in nature, such as those utilized by gene-manipulating parasites, could eventually be possible. In a 2016 article on neurowarfare, he wrote:

Microbiologists have recently discovered mind-controlling parasites that can manipulate the behavior of their hosts according to their needs by switching genes on or off. Since human behavior is at least partially influenced by their genetics, nonlethal behavior modifying genetic bioweapons that spread through a highly contagious virus could thus be, in principle, possible.

Krishnan’s observations regarding what’s possible are chilling; the reality that Rice University researchers have already “hacked” into fruit fly brains and commanded their wings via remote control, as previously described, is perhaps more so.

While chemical warfare has largely been banned at the international level, gaps in legislation and enforcement leave room for different types of chemical attacks or manipulations that target the brain. In this respect, Krishnan posits that biochemical calmatives and malodorants could incapacitate populations on a mass scale, or oxytocin could otherwise make them docile, subduing them for an enemy’s benefit.

Ultimately, as academics Hai Jin, Li-Jun Hou, and Zheng-Guo Wang posit in the Chinese Journal of Traumatology, putting the brain front and center as a military target that can be injured, interfered with, or enhanced could “establish a whole new ‘brain-land-sea-space-sky’ global combat mode.” As I will show, this emerging “brain-land-sea-space-sky” global combat mode appears poised to entirely change how conflicts between nation states are realized and fought.

Neurowarfare as a Geopolitical Force

As the world endures major wars in Ukraine and now the Middle East with Israel’s ongoing destruction of Gaza, “neurowarfare” is also on the horizon. Indeed, the technologies outlined in the previous sections appear slated to transform geopolitical relations as both hard- and soft-power tools, which could then be used to manipulate populations’ lifestyles, worldviews, and even cognitive abilities to make them pliable to someone else’s will.

Of course, various soft-power tactics have long worked to influence the minds, political allegiances, and socio-economic realities of civilians in “hostile” territories. The US, for example, has often used extensive propaganda campaigns as part of its “color revolution” efforts for regime change in countries with governments deemed inconvenient to American geopolitical goals.

Yet, neuroweapons, if used on a broad scale, seem positioned to take things to another level. As Dr. James Giordano, Georgetown University Neurology and Biochemistry Professor and Director of the Potomac Institute for Policy Studies’ Center for Neurotechnology Studies, explains in a 2020 article entitled “Redefining Neuroweapons: Emerging Capabilities in Neuroscience and Neurotechnology,” neuro-based advancements could theoretically be used to exercise socio-economic power elsewhere, or otherwise disrupt societies in ways that do not involve explicit military action.

Shockingly, he mentions that these disruptions could theoretically be done through the “denigration” of hostile groups’ cognitive or emotional states:

Indeed, neuroS/T [neuroscience and neurotechnology] can be employed as both “soft” and “hard” weapons in competition with adversaries. In the former sense, neuroS/T research and development can be utilized to exercise socio-economic power in global markets, while in the latter sense, neuroS/T can be employed to augment friendly forces’ capabilities or to denigrate the cognitive, emotive, and/or behavioral abilities of hostiles. Furthermore, both “soft” and “hard” weaponized neuroS/T can be applied in kinetic or non-kinetic engagements to incur destructive and/or disruptive effects.

As Giordano elaborates in another article, the “disruptive capabilities” of neuroweaponry make them especially valuable in non-kinetic engagements because they could put the perpetrators at a strategic advantage, where kinetic responses to non-kinetic neuroweaponry, however profound, may appear too aggressive. (In this context, “kinetic” engagements can be best described as overt or hot military engagements, where active and sometimes lethal force is used. Conversely, “non-kinetic” engagements refer to more covert strategies and activities to counter an enemy, including within the diplomatic, digital, economic, and perhaps now the “neuro” spheres.) Giordano goes on to say that if a recipient of neurowarfare does not sufficiently respond to an attack, the neuroweapon’s “disruptive influence and it’s [sic] possible strategically destructive effect become increasingly manifest.” In other words, neurowarfare seems positioned to drive nation states’ geopolitical strategies and how geopolitical tensions fester or explode in the future.

As Giordano has implied via his references to “socio-economic power,” non-kinetic neurowarfare appears likely to impact not only soldiers and military outcomes, but also civilians and the societies they live in, especially as states initiate hostilities. As a 2020 NATO-sponsored study on why “cognitive warfare” matters puts it, “future conflicts will likely occur amongst the people digitally first and physically thereafter in proximity to hubs of political and economic power.”

Namely, as Krishnan notes in a 2016 academic article, it seems possible that neurowarfare could even manipulate political leaders and populations to suppress their free will, enabling perpetrators to assert their political will on entire populations without resorting to kinetic responses. Here, a variety of tools (especially those described earlier in this article) could be used in tandem to disorient, placate, or devastate the masses on a large scale. Krishnan writes:

In a defensive function neurowarfare may be used to suppress conflicts before they can break out…Occupied populations could be more easily pacified and incipient insurgencies could be more easily suppressed before they gain any traction. Calmatives could be put into the drinking water or populations could be sprayed with oxytocin to make them more trusting. Potential terrorists may be detected using brain scans and then chemically or otherwise neutered. This obviously creates the possibility of creating a system of high-tech repression, where in the words of writer Aldous Huxley “a method of control [could be established] by which a people can be made to enjoy a state of affairs by which any decent standard they ought not to enjoy.”

As Krishnan mentions, aptly bringing Aldous Huxley’s “Brave New World” prescription for the future into the conversation, current circumstances have set the stage for possible manipulation and top-down “high-tech repression” at all levels, making it difficult for those experiencing it to even understand that their previous freedoms have been stripped from them.

Indeed, Krishnan explains that neurowarfare could transform hostile societies’ culture and values, or even collapse them based on the emotions these technologies could induce:

Offensive neurowarfare would be aimed at manipulating the political and social situation in another state. It could alter social values, culture, popular beliefs, and collective behaviors or change political directions, for example, by way of regime change through ‘democratizing’ other societies…However, offensive neurowarfare could also mean collapsing adversarial states by creating conditions of lawlessness, insurrection, and revolution, for example, by inducing fear, confusion, or anger. Adversarial states could be destabilized using advanced techniques of subversion, sabotage, environmental modification, and ‘gray’ terrorism, followed by a direct military attack. As a result, the adversarial state would not have the capacity to resist the policies of a covert aggressor.

Ultimately, as per the circumstances described by defense and neuroscience/technology analysts and academics in the space, neuroweapons could become an unprecedented new driver of soft power, where minds are a target of influence in ways that were previously unimaginable. In kinetic exchanges, meanwhile, minds could become targets to denigrate or destroy in the world of neurowarfare. Increasingly, however, the line between kinetic and non-kinetic seems to be blurring as war moves to target not just physical reality, but humans’ internal reality through the brain.

Neurorights or Neuromarkets?

As emerging neurotechnologies increasingly jeopardize the mind’s sanctity in and outside of war-time conditions, some are calling for the protection of the brain through “neurorights.” Groups like Columbia University’s Neurorights Foundation, whose stated goal is “to protect the human rights of all people from the potential misuse or abuse of neurotechnology,” have sprouted to advocate for the matter, and “neurorights” policy discussions are ongoing in high places, like the European Union and the United Nations Human Rights Council. Chile, meanwhile, has been praised by groups like UNESCO for its legislative efforts in the area, which have included adding brain-related rights to the country’s constitution.

“Neurorights” have been depicted in the media as protections that ensure emerging neurotechnologies are only used for “altruistic purposes.” However, a closer look at neurorights initiatives and adjacent legislation suggests many of those pushing for “neurorights” are in fact facilitating the emerging technologies’ normalization within the consumer market and everyday life through the creation of legislative frameworks. This opens up possibilities for what Unlimited Hangout contributing editor Whitney Webb describes as “neuromarkets.”

Indeed, those backing “neurorights” efforts deserve scrutiny for their close proximity to the very defense industry and adjacent institutions proliferating the controversial neurotechnologies I’ve described earlier in this article. For instance, Dr. Rafael Yuste, who heads Columbia University’s Neurorights Foundation and the university’s Kavli Institute, helped pitch the now heavily DARPA-influenced and funded BRAIN Initiative to the US government. He is also the coordinator of the BRAIN Initiative’s 650 international centers, and has participated in projects like those I outlined earlier in this article. Through research and genetic engineering on mice, for example, Dr. Yuste has “helped pioneer a technology that can read and write to the brain with unprecedented precision,” where he can even “make the mice ‘see’ things that aren’t there.”

Despite Yuste’s proximity to the very organizations researching and promoting questionable neurotechnologies, he’s one of the primary actors behind Chile’s neurorights legislation (as opposed to Chileans). Indeed, the legislation appears less revolutionary within the context of Chile’s legacy as a testing ground for neoliberal policymaking efforts created abroad.

What’s more, legal scholars have argued “neurorights” as proposed are inherently “flawed” from a legal standpoint, with Jan Christoph Bublitz writing that the neurorights proposal “is tainted by neuroexceptionalism and neuroessentialism, and lacks grounding in relevant scholarship.” Alejandra Zúñiga-Fajuri, Luis Villavicencio Miranda, Danielle Zaror Miralles and Ricardo Salas Venegas argue that the neurorights concept is legally “redundant,” and “is based on an outdated ‘Cartesian reductionist’ philosophical thesis, which advocates the need to create new rights in order to shield a specific part of the human body: the brain.”

Whether the legal system is just in the first place is debatable. Still, it’s odd that neurorights legislative proposals are being pushed around the world despite apparently being unable to withstand scrutiny from legal scholars. Indeed, neurorights legislation is under consideration in a number of countries, especially in Latin America, in a manner reminiscent of many recent top-down, global policy initiatives (e.g., the global response to a novel coronavirus in 2020).

In any case, neurotechnologies like BCIs and their normalization at the consumer level could pose myriad ethical problems. For example, DARPA’s augmented cognition efforts to soup up warfighter brains as described earlier in the article, if brought to the consumer market, could quickly wreak havoc and perhaps even create cognitive inequities if inaccessible to most. As Dr. Yuste himself told the New York Times, “Certain groups will get this tech, and will enhance themselves… This is a really serious threat to humanity.”

To address this alleged problem of “accessibility,” one of the neurorights proposals crafted by Yuste and the Morningside Group (a group of scientists which, after being called together by Yuste, has worked to identify priorities they consider neurorights) is the “right to fair access to mental augmentation.” But it’s not hard to imagine neurorights legislation facilitating a number of dystopian scenarios, as the very availability of such tech may well put economic or social pressure on the general population to receive or use it, perhaps in the form of state-subsidized BCIs or even state-mandated BCIs for some professions or groups of people. Meanwhile, people in wealthier countries could cognitively augment themselves in ways unavailable in poorer countries (it seems unlikely, after all, that truly equal access to “cognitive augmentation” could be facilitated internationally), bringing them new, untold advantages with global, geopolitical impacts.

In any case, it’s curious that “equitable access” to cognitive augmentation is being legislated upon through “neurorights initiatives” without substantive debate as to whether such augmentation should be allowed in the first place or is even safe.

Ultimately, rather than protecting people from the possible ethical harms of emerging neurotechnologies, neurorights legislation appears poised to normalize BCIs and the other advanced and often dystopian neurotechnologies discussed in this investigation, facilitating their arrival in daily life.

Neurowarfare: Another Step Towards Transhumanism?

Altogether, ongoing strides to enhance, and in turn, degrade or destroy warfighter capabilities on the battlefield through tools like BCIs and other implantables, neuropharmacologies, and even efforts to augment cognition may well transform the nature of warfare, kinetic or otherwise, as militaries put the brain front and center in conflict.

Touted as a way to sidestep the possible ramifications of these technologies, “neurorights,” which have been proposed by persons closely affiliated with the organizations creating the tech in the first place, ultimately appear to be about normalizing the tech and introducing it to and integrating it within the public sphere.

Critically, the increased and growing presence of neurotechnologies for use in daily life could well normalize and accelerate efforts towards transhumanism, a dystopian goal of many amongst the power elite to unite man and machine in their push for the Fourth Industrial Revolution, a revolution they claim will blur the physical, digital, and biological spheres. After all, if technologies that can read minds, make prosthetic limbs “touch,” or use thoughts to control machines become everyday tools, it seems the sky’s the limit with respect to how humans could use them to transform societies — and themselves, for better or for worse.

Ultimately, such efforts towards transhumanism are being pushed from the top with little room for meaningful public debate. These efforts are also often intertwined with ongoing pushes towards stakeholder capitalism and efforts to hand decision making processes and common infrastructure to an unaccountable private sector through “public-private partnerships.”

Indeed, in light of such advances, both sovereignty and humanity are under attack — on and off the battlefield.

Author

Stavroula Pabst is a writer, comedian, and media PhD student at the National and Kapodistrian University of Athens in Athens, Greece. Her writing has appeared in publications including Propaganda in Focus, Reductress, Al Mayadeen and The Grayzone. Keep up with her work by subscribing to her Substack at stavroulapabst.substack.com.

Source: The Conversation

In a future with more ‘mind reading,’ thanks to neurotech, we may need to rethink freedom of thought

April 9, 2024

Socrates, the ancient Greek philosopher, never wrote things down. He warned that writing undermines memory – that it is nothing but a reminder of some previous thought. Compared to people who discuss and debate, readers “will be hearers of many things and will have learned nothing; they will appear to be omniscient and will generally know nothing.”

These views may seem peculiar, but his central fear is a timeless one: that technology threatens thought. In the 1950s, Americans panicked about the possibility that advertisers would use subliminal messages hidden in movies to trick consumers into buying things they didn’t really want. Today, the U.S. is in the middle of a similar panic over TikTok, with critics worried about its impact on viewers’ freedom of thought.

To many people, neurotechnologies seem especially threatening, although they are still in their infancy. In January 2024, Elon Musk announced that his company Neuralink had implanted a brain chip in its first human subject – though they accomplished such a feat well after competitors. Fast-forward to March, and that person can already play chess with just his thoughts.

Brain-computer interfaces, called BCIs, have rightfully prompted debate about the appropriate limits of technologies that interact with the nervous system. Looking ahead to the day when wearable and implantable devices may be more widespread, the United Nations has discussed regulations and restrictions on BCIs and related neurotech. Chile has even enshrined neurorights – special protections for brain activity – in its constitution, while other countries are considering doing so.

A cornerstone of neurorights is the idea that all people have a fundamental right to determine what state their brain is in and who is allowed to access that information, the way that people ordinarily have a right to determine what is done with their bodies and property. It’s commonly equated with “freedom of thought.”

Many ethicists and policymakers think this right to mental self-determination is so fundamental that it is never OK to undermine it, and that institutions should impose strict limits on neurotech.

But as my research on neurorights argues, protecting the mind isn’t nearly as easy as protecting bodies and property.

Thoughts vs. things

Creating rules that protect a person’s ability to determine what is done to their body is relatively straightforward. The body has clear boundaries, and things that cross it without permission are not allowed. It is normally obvious when a person violates laws prohibiting assault or battery, for example.

The same is true about regulations that protect a person’s property. Protecting body and property are some of the central reasons people come together to form governments.

Generally, people can enjoy these protections without dramatically limiting how others want to live their lives.

The difficulty with establishing neurorights, on the other hand, is that, unlike bodies and property, brains and minds are under constant influence from outside forces. It’s not possible to fence off a person’s mind such that nothing gets in.

Instead, a person’s thoughts are largely the product of other people’s thoughts and actions. Everything from how we perceive colors and shapes to our most basic beliefs is influenced by what others say and do. The human mind is like a sponge, soaking up whatever it happens to be immersed in. Regulations might be able to control the types of liquid in the bucket, but they can’t protect the sponge from getting wet.

Even if that were possible – if there were a way to regulate people’s actions so that they don’t influence others’ thoughts at all – the regulations would be so burdensome that no one would be able to do much of anything.

If I’m not allowed to influence others’ thoughts, then I can never leave my house, because just by my doing so I’m causing people to think and act in certain ways. And as the internet further expands a person’s reach, not only would I not be able to leave the house, I also wouldn’t be able to “like” a post on Facebook, leave a product review, or comment on an article.

In other words, protecting one aspect of freedom of thought – someone’s ability to shield themselves from outside influences – can conflict with another aspect of freedom of thought: freedom of speech, or someone’s ability to express ideas.

Neurotech and control

But there’s another concern at play: privacy. People may not be able to completely control what gets into their heads, but they should have significant control over what goes out, and some people believe societies need “neurorights” regulations to ensure that. Neurotech represents a new threat to our ability to control which of our thoughts we reveal to others.

There are ongoing efforts, for example, to develop wearable neurotech that would read and adjust the customer’s brainwaves to help them improve their mood or get better sleep. Even though such devices can only be used with the consent of the user, they still take information out of the brain, interpret it, store it and use it for other purposes.

In experiments, it is also becoming easier to use technology to gauge someone’s thoughts. Functional magnetic resonance imaging, or fMRI, can be used to measure changes in blood flow in the brain and produce images of that activity. Artificial intelligence can then analyze those images to interpret what a person is thinking.

Neurotechnology critics fear that as the field develops, it will be possible to extract information about brain activity regardless of whether or not someone wants to disclose it. Hypothetically, that information could one day be used in a range of contexts, from research for new devices to courts of law.

Regulation may be necessary to protect people from neurotech taking information out. For example, nations could prohibit companies that make commercial neurotech devices, like those meant to improve the wearer’s sleep, from storing the brainwave data those devices collect.

Yet I would argue that it may not be necessary, or even feasible, to protect against neurotech putting information into our brains – though it is hard to predict what capabilities neurotech will have even a few years from now.

In part, this is because I believe people tend to overestimate the difference between neurotech and other types of external influence. Think about books. Horror novelist Stephen King has said that writing is telepathy: When an author writes a sentence – say, describing a shotgun over the fireplace – they spark a specific thought in the reader.

In addition, there are already strong protections on bodies and property, which I believe could be used to prosecute anyone who forces invasive or wearable neurotech upon another person.

How different societies will navigate these challenges is an open question. But one thing is certain: With or without neurotech, our control over our own minds is already less absolute than many of us like to think.

Source: Unlimited Hangout

February 19, 2022

Whitney discusses the ulterior motives and background of the individuals behind the push for “neurorights” at the national and international level and why it’s more about making new markets than protecting our rights.

Available on all podcast platforms.

Ways to connect

PGP Fingerprint: 7351 9c62 95cc 8130 d8b1 c877 ec99 9aaf 5b1f b029

Email: thetruthaddict@tutanota.com

Telegram: @JoelWalbert

The Truth Addict Telegram channel

Hard Truth Soldier chat on Telegram

The Truth Addict Media Archive (downloadable documentaries, interviews, movies, TV, stand-up, etc)

Mastodon: @thetruthaddict@noauthority.social

Session: 05e7fa1d9e7dcae8512eed0702531272de14a7f1e392591432551a336feb48357c

Odysee: TruthAddict

Rumble: thetruthaddict09

NoAgendaTube: The Truth Addict

Donations (#Value4Value)

Buy Me a Coffee (One time donations as low as $1)

Bitcoin:

bc1qc9ynhlmgxcdd2mjufqr8fxhf248gqee05unmpg (on chain)

nemesis@getalby.com (lightning)

joelw@fountain.fm (lightning)

+wildviolet72C (PayNym)

Monero:

85KchxraVQcCz14ku7WH9wV7b2xzVR6Cf8msYL5FDpxSPQXrJLdUe2daeNeWJozy7s4tCCq8noPZM7j3zvQaME4DTkPRZJA